Challenge #1 - A Proposed Solution

Designing a Holiday Rental Property Management Tool

Welcome to the 117 new subscribers who joined in the last week of the year!

Thank you so much for your early support!

If you have any feedback on this challenge series, please let me know!

The Challenge

If you haven’t seen the challenge requirements and tried to design your own solution yet, please do that before reading my approach.

Designing your solution first will allow you to compare your approach to mine, see how we do things differently and maybe learn some new tricks or patterns!

Serverless Architecture Challenge #1

A founder has been contacted by a woman who runs a holiday rental business. She has 8 properties and is tired of trying to schedule and organise all of the properties using Google Calendar and trying to remember to manually email everyone involved.

The founder then found 4 other holiday rental owners who struggle with similar issues and would love an app to handle bookings and communication for visitors and staff (cleaners, repair, etc). They need an app that can integrate with the common holiday rental platforms (Booking.com, Airbnb, etc). Owners would also like a site where visitors are able to view all of their properties and rent them directly.

My Approach

When I looked through the functional requirements I thought of them in three main groups:

Property Management

Bookings

Communication & Scheduling

These are obviously related, but they seem like logical domain boundaries to work with. If I were building this, I might create these as three separate microservices.

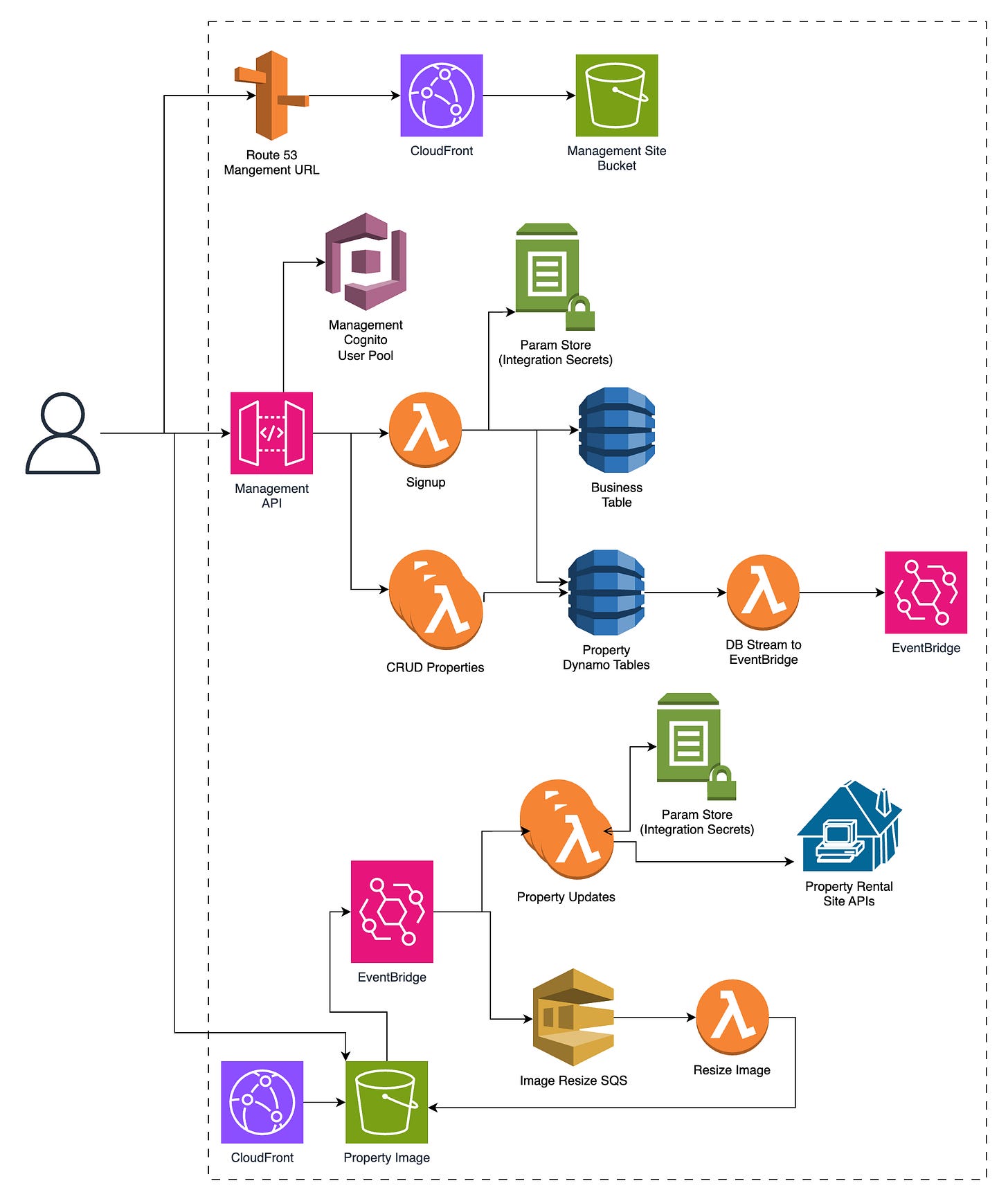

Property Management

This part initially seems the simplest - a management website, CRUD API and database - but grows in complexity the more you think about it.

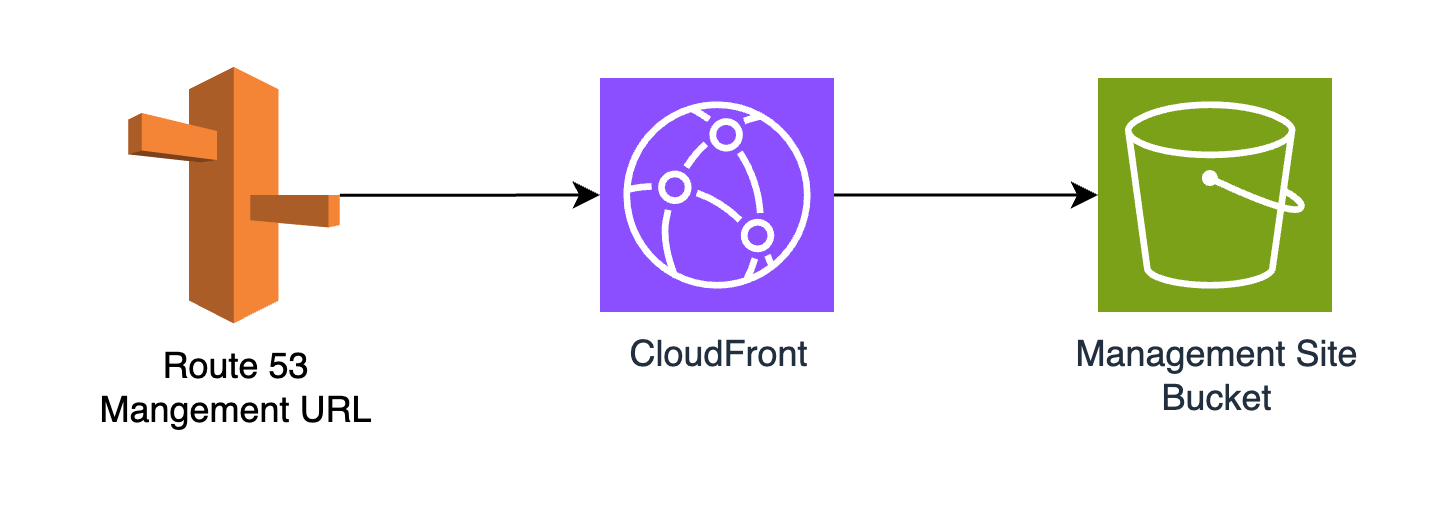

For the website, I’ve decided to keep it simple and have a React app, hosted in S3 with CloudFront and Route53 for a custom domain name.

Owner Auth

I’d use Cognito for authentication and authorisation, having a separate user pool for property owners. On business signup I would create a business ID and use that as an attribute on each user in Cognito. That way I can get it from inside the request and I don’t risk users sending the wrong business ID.

Using a business ID also allows me to have multiple users associated with a single business. I’ve not designed further access levels (founder, manager, viewer, etc.) but these could be added as another attribute or as groups in Cognito without too much effort.
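To make the “get it from inside the request” point concrete, here is a minimal sketch of reading the business ID in an API Lambda. It assumes a REST API with a Cognito user pool authorizer (which exposes the token claims on `requestContext.authorizer.claims`) and a hypothetical custom attribute called `custom:businessId`. The event type below only models the fields we read.

```typescript
// Minimal shape of the parts of an API Gateway (REST) proxy event we need.
// With a Cognito user pool authorizer, ID token claims appear under
// requestContext.authorizer.claims; custom attributes carry a "custom:" prefix.
interface AuthorizedEvent {
  requestContext: {
    authorizer?: { claims?: Record<string, string | undefined> };
  };
}

// Hypothetical attribute name; use whatever the user pool actually defines.
const BUSINESS_ID_CLAIM = "custom:businessId";

function getBusinessId(event: AuthorizedEvent): string {
  const businessId = event.requestContext.authorizer?.claims?.[BUSINESS_ID_CLAIM];
  if (!businessId) {
    // Fail closed: never fall back to a business ID supplied in the body or query.
    throw new Error("Missing businessId claim on authenticated user");
  }
  return businessId;
}
```

Every owner-facing handler would call `getBusinessId(event)` and use the result as the partition key, ignoring any business ID the client sends.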

Databases & Tables

I’ve gone with DynamoDB because it’s very performant, cheap and the current access patterns aren’t too complex. We also get DynamoDB Streams, which means we can send events to EventBridge any time a property is updated. This will then feed into our integrations.

Property

pk: {businessId}

sk: property#{propertyId}

Business Integration

pk: {businessId}

sk: integration#{integrationDestination}

Booking

pk: {propertyId}

sk: {date}#{bookingId}

pk2: {businessId}

sk2: {date}#{bookingId}

pk3: {visitorId}

sk3: {date}#{bookingId}

The pk2/sk2 and pk3/sk3 pairs would be the keys of two global secondary indexes, which allows for querying by property, business or visitor.
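A small sketch of how the booking keys could be built, assuming `{date}` is an ISO 8601 date (e.g. `2024-07-14`). Because ISO dates sort lexicographically, a date range on any of the three access patterns becomes a single `BETWEEN` on the sort key. The `Booking` shape and helper names are illustrative, not part of the design above.

```typescript
// Illustrative booking shape; attribute names follow the schema above.
interface Booking {
  bookingId: string;
  propertyId: string;
  businessId: string;
  visitorId: string;
  date: string; // ISO 8601 date, e.g. "2024-07-14"
}

function bookingKeys(b: Booking) {
  const sk = `${b.date}#${b.bookingId}`;
  return {
    pk: b.propertyId, sk,       // main table: bookings for a property
    pk2: b.businessId, sk2: sk, // GSI: bookings for a business
    pk3: b.visitorId, sk3: sk,  // GSI: bookings for a visitor
  };
}

// Sort-key bounds for all bookings between two dates, inclusive.
// "~" sorts after "#", digits and letters, so the upper bound includes
// every booking on the end date.
function dateRangeBounds(from: string, to: string): [string, string] {
  return [`${from}#`, `${to}#~`];
}
```

These bounds would plug into a query such as `KeyConditionExpression: "pk = :p AND sk BETWEEN :from AND :to"`, or the `pk2`/`sk2` equivalent on the business index.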

Backups

This is something I remembered to add based on the Well-Architected Framework questions from last week.

Although it’s unlikely that the DynamoDB table gets corrupted, the table could be deleted by a change to the IaC. To be able to recover from that, I would use point-in-time recovery (PITR).

There is the new incremental DynamoDB to S3 export feature, but this would increase the complexity of the app and the amount of work needed if a recovery is ever required. We could also use both methods for extra backup security, but given this needs to be maintained by a single founder, I decided against it.

Integrating with other holiday rental sites

We need to be able to send any changes to the business or the property to the other holiday rental sites. When a business signs up, they can select which of the sites they are on and paste in the two secrets for each integration (an API key for sending data and a secret for validating incoming events), which we store in Parameter Store.

Sending data to other sites

There is a Lambda listening for each of the events that need to be sent to the other sites (propertyDataUpdated, propertyImageUpdated, availabilityChange, etc). This Lambda first gets the integration data for that business and then makes the request to each of the connected sites.

I’ve decided to split it on event type so that if a new event needs to be sent in the future, we’re not touching the existing code. If we add a new site we would have to change existing code, but I suspect that is far less likely.

If a request fails to be sent to any of the sites, it goes in a dead letter queue to be retried later.
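Here is a minimal sketch of the per-event-type fan-out, assuming a hypothetical `Sender` function that wraps one site’s API call (fetching the API key from Parameter Store is left out). Each site is sent to independently, so one failing site doesn’t block the others, and the failures are returned so the caller can push each one to the dead-letter queue.

```typescript
// Hypothetical shapes: one integration record per connected site, as stored
// under pk = businessId, sk = integration#{integrationDestination}.
interface Integration {
  destination: string; // e.g. "airbnb", "booking.com"
  apiKey: string;      // fetched from Parameter Store in the real Lambda
}

interface OutboundEvent {
  type: string; // e.g. "propertyDataUpdated"
  businessId: string;
  payload: unknown;
}

type Sender = (integration: Integration, event: OutboundEvent) => Promise<void>;

interface Failure { destination: string; error: string }

// Send to every connected site; Promise.allSettled waits for all of them
// even if some reject. Rejections are collected for the dead-letter queue.
async function fanOut(integrations: Integration[], event: OutboundEvent, send: Sender): Promise<Failure[]> {
  const results = await Promise.allSettled(integrations.map((i) => send(i, event)));
  const failures: Failure[] = [];
  results.forEach((r, idx) => {
    if (r.status === "rejected") {
      failures.push({ destination: integrations[idx].destination, error: String(r.reason) });
    }
  });
  return failures;
}
```

Adding a new site only means a new integration record and a new `Sender` branch, which matches the trade-off above: new event types don’t touch this code, new sites do.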

Getting data from third parties

This is much simpler as it only needs to handle ‘booking changed’ and ‘message’ events. It’s three API endpoints (one for each site) backed by a Lambda. That Lambda pulls the secret from Parameter Store, uses it to validate the event and then writes the data to the required table or fires the required event.
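As a sketch of the validation step, assume a site signs the raw request body with HMAC-SHA256 using the shared secret and sends the hex digest in a header. That scheme is an assumption for illustration: each real platform defines its own signing scheme, so the details would come from their docs.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Assumed scheme: hex-encoded HMAC-SHA256 of the raw body, keyed with the
// secret the owner pasted in at signup.
function isValidSignature(rawBody: string, signatureHex: string, secret: string): boolean {
  const expected = createHmac("sha256", secret).update(rawBody, "utf8").digest();
  const received = Buffer.from(signatureHex, "hex");
  // timingSafeEqual throws if lengths differ, so compare lengths first;
  // the constant-time compare avoids leaking how many bytes matched.
  return received.length === expected.length && timingSafeEqual(received, expected);
}
```

The check must run against the raw body exactly as received; re-serialising parsed JSON can change whitespace or key order and break the signature.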

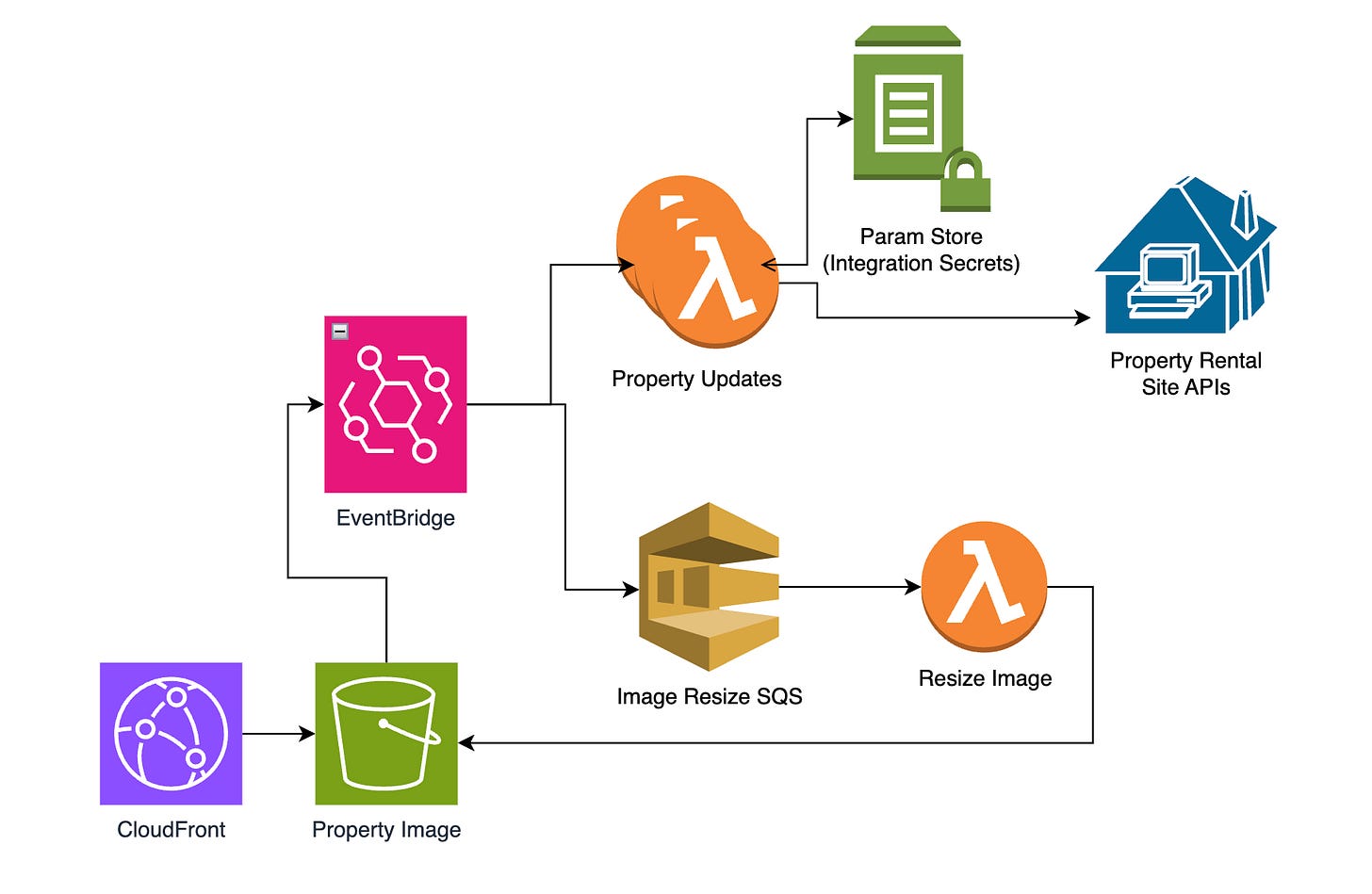

Handling Images

I would have a separate bucket for user-uploaded images, again with CloudFront as the CDN. Given Lambda has a 6MB request payload limit (and API Gateway caps payloads at 10MB), I would use presigned S3 URLs for image upload.

But I don’t want to show the full-sized images throughout our website, so we need to make some thumbnails. I’ve gone with firing an EventBridge event when files are uploaded to the /fullsize folder path, which is picked up by an SQS queue. This feeds a Lambda that creates multiple lower-resolution versions of the image and compresses them to optimise page speed for visitors. I would limit this to 10 concurrent Lambda instances, since these images don’t need to be instantly available.
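A rough sketch of the two pieces that tie this together. The first is an EventBridge rule pattern matching S3 “Object Created” events under `fullsize/`, which assumes the bucket has EventBridge notifications enabled. The second is a helper mapping an original to the keys the resize Lambda would write. The bucket name, output widths and `resized/` layout are hypothetical.

```typescript
// Assumed EventBridge rule pattern for S3 "Object Created" events.
// The bucket name is hypothetical.
const resizeRulePattern = {
  source: ["aws.s3"],
  "detail-type": ["Object Created"],
  detail: {
    bucket: { name: ["holiday-rental-images"] },
    object: { key: [{ prefix: "fullsize/" }] },
  },
};

// Hypothetical output widths; the article only fixes the fullsize/ prefix.
const WIDTHS = [320, 768, 1280];

// Map an uploaded original to the keys the resize Lambda would write.
// Anything outside fullsize/ yields no work, so the Lambda never
// re-processes its own output.
function resizedKeys(sourceKey: string): string[] {
  const prefix = "fullsize/";
  if (!sourceKey.startsWith(prefix)) return [];
  const rest = sourceKey.slice(prefix.length); // e.g. "prop-1/kitchen.jpg"
  return WIDTHS.map((w) => `resized/w${w}/${rest}`);
}
```

Writing the outputs under a different prefix from the one the rule listens on is what stops a resize from triggering another resize.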

I’ve used EventBridge as it allows for really easy fanning out of the events. For example, I would have another Lambda where we send the images to the other holiday rental integrations.

I’ve used an SQS queue as a user could upload 50 images for 20 properties all at once (unlikely but possible). If this invoked a Lambda directly then it would trigger 1000 Lambda instances at once. This would have one of two effects:

If we haven’t set reserved concurrency on our other functions, the resize Lambdas would use up the account’s concurrency, so other Lambdas would be throttled when a request comes in. This would mean lots of failed requests elsewhere in the app.

If our other Lambdas are protected by reserved concurrency, the resize Lambdas would hit the remaining account limit (1000 concurrent executions by default). Some of the image resizing Lambdas would be throttled, meaning we might not have thumbnails or medium-resolution images for certain properties.

Now we have the ability to sign up, connect to the other sites, create properties and upload images.

Bookings

Before jumping into diagrams, let’s work out how many bookings we need to handle at max capacity.

Let’s say 50 bookings a year per property, and 400 owners with 20 properties each: 50 x 400 x 20 = 400,000 bookings a year. That is roughly 8,000 a week, or 96,000 over 12 weeks (about 3 months).

There are two ways to take bookings:

Through one of the third-party websites

On our own website

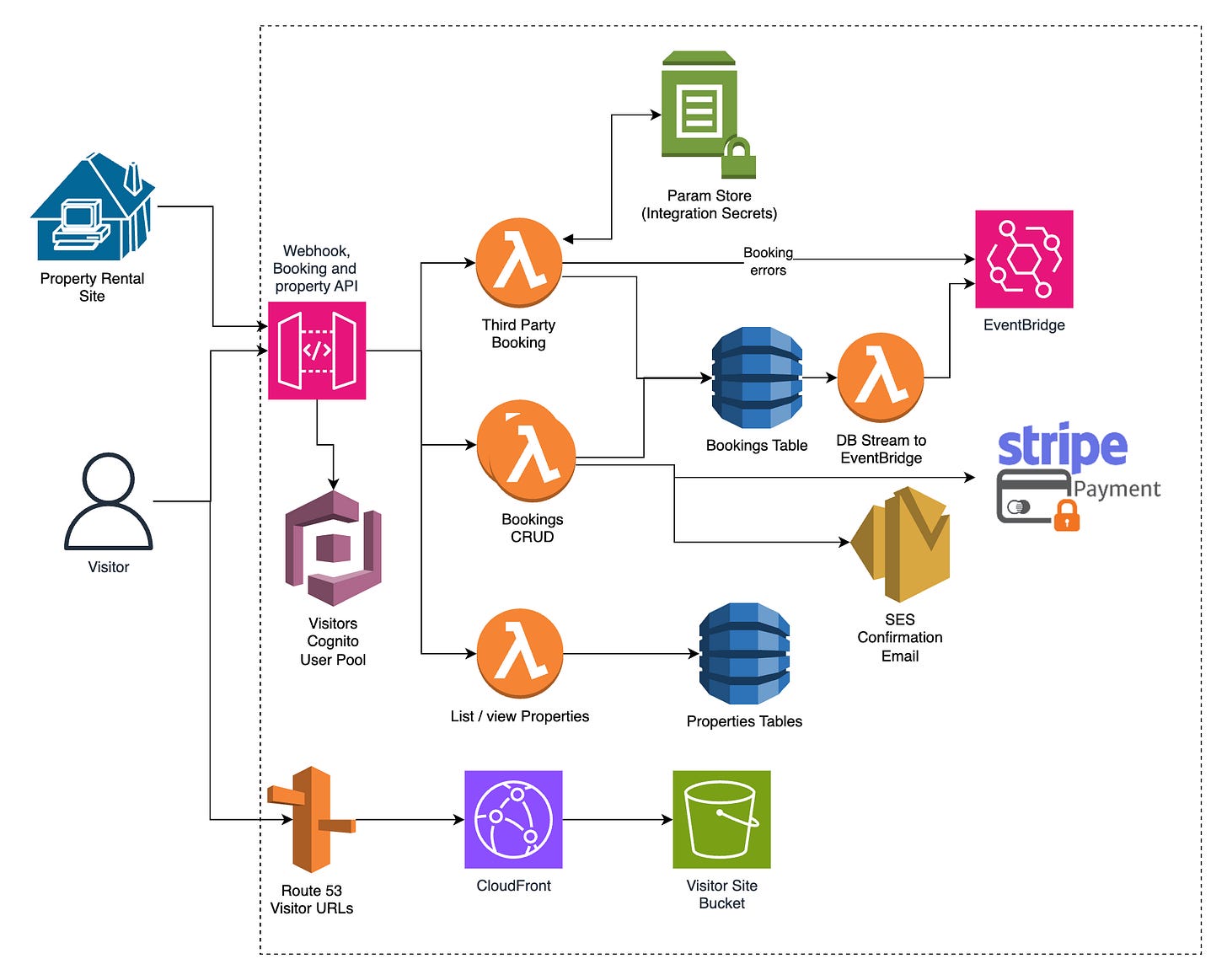

Third Party Site Booking

Let’s start with the third-party sites. In the first section, we integrated so that properties and availability are synced to the third-party sites.

They’ll manage their own users and payments, but we need to take any booking events and add the booking to our table. If there is an issue (double booking) we can fire a booking error event for the owner to resolve manually.
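Detecting a double booking comes down to an interval overlap check. Here is a minimal sketch, assuming check-in is inclusive, check-out is exclusive and both are ISO dates, which compare correctly as strings. Note that a read-then-check like this is racy on its own. In the real table you would want a conditional or transactional write so two simultaneous bookings can’t both succeed.

```typescript
// Hypothetical stay shape: check-in inclusive, check-out exclusive.
interface Stay { bookingId: string; checkIn: string; checkOut: string }

// Two stays clash if each starts before the other ends, so a check-out and
// a check-in on the same day do not clash.
function overlaps(a: Stay, b: Stay): boolean {
  return a.checkIn < b.checkOut && b.checkIn < a.checkOut;
}

// Existing bookings that clash with an incoming one. A non-empty result is
// where we would fire the booking error event for the owner.
function findClashes(incoming: Stay, existing: Stay[]): Stay[] {
  return existing.filter((s) => s.bookingId !== incoming.bookingId && overlaps(incoming, s));
}
```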

We’ll cover what happens after the booking is made later in this doc.

Our Site

This is going to be a little more complex. We now have to host a site for each of the businesses that shows all of their properties.

The site will be another React app, hosted in S3 with CloudFront. We’ll use Route53 to point each of the business URLs (mybusiness.holidayrental.com) to our CloudFront distribution. When a business signs up, they can set the name they want and we create a Route53 record.

For user auth, we’ll again use Cognito, but with a different user pool. This means that once a visitor signs up to rent from one of our clients, they don’t need to create a new account to rent from another one. Some companies may complain about this. If there are large clients who need more isolation, we could create a user pool per business. I wouldn’t advise building that until there is a client who requires it and is willing to pay a premium for it. This could be an enterprise feature upgrade in the future.
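Since every business subdomain points at the same CloudFront distribution and the same React app, the app has to work out which business it is serving. One simple approach, sketched here as an assumption rather than the design above, is to derive a business slug from the hostname (`window.location.hostname` in the browser) and look the business up by that slug. The apex domain comes from the example URL above.

```typescript
// Apex domain taken from the example URL above.
const APEX = "holidayrental.com";

// "mybusiness.holidayrental.com" -> "mybusiness". Returns null for the apex
// itself, nested subdomains, foreign hosts or invalid labels.
function businessSlugFromHost(host: string): string | null {
  const hostname = host.toLowerCase().split(":")[0]; // drop any port
  const suffix = `.${APEX}`;
  if (!hostname.endsWith(suffix)) return null;
  const slug = hostname.slice(0, -suffix.length);
  // A single DNS label: letters, digits and inner hyphens, max 63 chars.
  return /^[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?$/.test(slug) ? slug : null;
}
```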

Obviously, visitors would need to be able to view properties and availability before signing up. For this, I would have separate API endpoints which don’t require auth, but the booking endpoints would require a user to sign up or log in.

As per the requirements, payments for bookings would be done through Stripe. To keep things simple, I would advise starting with only pay in advance. Doing ‘pay later’ would be nice but would add more complexity when there are failed payments.

Once a booking is made, it would be written to the same booking table as with third-party site bookings. Since we made the booking we’re also going to send a confirmation email using SES.

Communication and Scheduling

With a booking made, we need to communicate the availability change to all other sites, book the cleaners and schedule all of the emails that need to be sent for the booking.

We’ll have a Lambda listening to the DynamoDB stream from the bookings table, which will fire an event into EventBridge for each booking.

There will be a few things listening for this ‘booking’ event. All of these will just be Lambdas invoked directly, since bookings will be infrequent enough that we won’t get overloaded.

First is a Lambda for communicating those availability changes to all other apps.

Second is a Lambda to book the cleaners.

Scheduling

Third is where we get to have some fun with scheduling. There are lots of ways to do scheduling, but I would go with EventBridge Scheduler. Scheduling with DynamoDB TTL only guarantees deletion within 48 hours, and that isn’t good if a visitor is waiting for the email with their key code. EventBridge Scheduler has 60s accuracy, which is more than accurate enough.

Limits

There is a soft limit of 1M schedules. Assuming most people book 3 months in advance, that means about 96,000 upcoming bookings at any one time.

We need to schedule a few things before the booking: booking reminder, welcome email (with key code), cleaner email. 3 schedules per booking means that we might have 300k schedules live at most points. I would definitely have an alarm or metric for when the number of schedules goes above 750k so we can request a higher limit from AWS in time.
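The same back-of-envelope estimate as a small function, using the figures from this article: 400 owners x 20 properties x 50 bookings a year, bookings made 12 weeks ahead, 3 schedules per booking, the 1M quota and a 750k alarm threshold. It lands slightly under the 300k figure because it uses 400,000 / 52 rather than the rounded 8,000 bookings a week.

```typescript
// Figures from the article; the function itself is just arithmetic.
const SCHEDULE_QUOTA = 1_000_000;
const ALARM_THRESHOLD = 750_000;

function liveSchedules(
  owners: number,
  propertiesPerOwner: number,
  bookingsPerPropertyPerYear: number,
  leadTimeWeeks: number,
  schedulesPerBooking: number,
): number {
  const bookingsPerWeek = (owners * propertiesPerOwner * bookingsPerPropertyPerYear) / 52;
  return Math.round(bookingsPerWeek * leadTimeWeeks * schedulesPerBooking);
}

// 400,000 bookings/year ≈ 7,692/week → ≈ 92,308 upcoming bookings → ≈ 276,923 schedules
const live = liveSchedules(400, 20, 50, 12, 3);
const headroom = SCHEDULE_QUOTA - live;
console.log(live, headroom, live > ALARM_THRESHOLD ? "request a quota increase" : "ok");
```

Re-running this with a new owner count is a quick way to check how close growth brings us to the alarm threshold.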

Each of these schedules does something relatively simple: either calling an API or sending an email.

We also need to think about what happens if a user cancels a booking. Luckily, we can just delete the schedules. We only need to make sure we name the schedules in a way that lets us easily identify them.
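One way to make the schedules easy to identify is to derive their names deterministically from the booking ID. A cancellation can then delete them by name without any lookup. This sketch assumes the three kinds listed above and a `booking-{bookingId}-{kind}` naming convention (hypothetical). It also builds the `at(yyyy-mm-ddThh:mm:ss)` expression used for one-time schedules, assuming the schedule’s timezone is left at its UTC default.

```typescript
// Hypothetical schedule kinds, matching the three listed above.
const KINDS = ["reminder", "welcome", "cleaner"] as const;
type Kind = (typeof KINDS)[number];

// Scheduler names allow letters, digits, "-", "_" and "." (max 64 chars).
function scheduleName(bookingId: string, kind: Kind): string {
  const name = `booking-${bookingId}-${kind}`;
  if (!/^[0-9a-zA-Z\-_.]{1,64}$/.test(name)) throw new Error(`Invalid schedule name: ${name}`);
  return name;
}

// One-time schedule expression; toISOString() is UTC, so this assumes the
// schedule's timezone setting is left at UTC.
function atExpression(when: Date): string {
  return `at(${when.toISOString().slice(0, 19)})`;
}

// Every schedule name to delete when a booking is cancelled.
function namesForBooking(bookingId: string): string[] {
  return KINDS.map((k) => scheduleName(bookingId, k));
}
```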

Key Codes

This can be something as simple as having a key code table. We need to make sure that we don’t delete old codes until we are sure that the code has been changed. For this I have gone with a row per code, with the PK being the property ID and the SK being a timestamp. This way we can query all codes for a property.

When we send the ‘please update the code’ email to the cleaners, we create a new record with a new code and delete any codes beyond the four most recent. We could leave them indefinitely, but that isn’t an efficient use of database space. Storing 4 codes is a nice balance between having a backup in case we need an old code and being efficient.
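The rotation rule is easy to get off by one, so here is a small sketch of it. It assumes the item shape described above (pk = property ID, sk = ISO timestamp) with a hypothetical `code` attribute. In practice the existing codes would come from a query on the property ID with `ScanIndexForward: false` (newest first).

```typescript
// Item shape for the key code table; `code` is a hypothetical attribute name.
interface KeyCode { propertyId: string; createdAt: string; code: string }

const CODES_TO_KEEP = 4;

// Add the fresh code, then keep the four newest (including the fresh one)
// and return the rest for deletion. ISO timestamps sort correctly as strings.
function rotate(existing: KeyCode[], fresh: KeyCode): { keep: KeyCode[]; remove: KeyCode[] } {
  const newestFirst = [...existing, fresh].sort((a, b) =>
    a.createdAt < b.createdAt ? 1 : a.createdAt > b.createdAt ? -1 : 0,
  );
  return { keep: newestFirst.slice(0, CODES_TO_KEEP), remove: newestFirst.slice(CODES_TO_KEEP) };
}
```

Because the previous codes survive the rotation, a failed cleaner email still leaves the code that is actually on the key box in the table.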

Emailing

For this I would start with SES. It’s easy to use and has reasonable limits. Using any other email service provider would probably be fine as well. If it gets to the scale where cost or service limits are an issue, switching to another service provider would be fine.

Self Review Questions

Now I’ll go through the questions and answer them as best I can.

Security:

How do you secure your data in transit? HTTPS.

How do you secure your data at rest? DynamoDB encrypts all data at rest by default, and S3 encrypts new objects by default.

How is your architecture protected against malicious intent? Having a good org structure and locked-down user access prevents developers from accessing production data.

APIs - Cognito auth, with attributes on users to restrict access to your own properties. API Gateway has some built-in DDoS protection. If more is needed, add WAF in front.

Storage (database and file storage) - the database is only accessible by the API and Lambdas. File upload is limited to image formats.

Reliability:

How would your infra react if an availability zone went offline for an hour?

Would your application still be usable? All of the services used are multi-az so it would still work.

Would there be any temporary or permanent loss of data? The only loss would be if a Lambda went offline whilst processing an event.

Would that be acceptable? Yes, it keeps working through an AZ failure.

How would your infra react if a whole region went offline for an hour?

Would your application still be usable? No, everything lives in one region. The sites would be cached in CloudFront but nothing else would work.

Would there be any temporary or permanent loss of data? Only data loss from currently running events/Lambdas. All data would be inaccessible but not lost. Events from third parties wouldn’t be processed - booking changes wouldn’t be accounted for.

Would that be acceptable? yes, the probability of a full region outage is very small. Building a multi region architecture would be, in my opinion, overkill. It would overcomplicate and slow down future development.

How would your application react if your traffic increased 10x in 5 minutes? (An advert plays on TV.) - Assume 8000 bookings/week, a 3% conversion rate and 25% of bookings on our own site. Users/min = 8000 x 25% / 3% / (7 x 24 x 60) ≈ 6.6 users/min. If each user views 3 pages per minute and browses for 5 minutes, that is 6.6 x 3 x 5 ≈ 99 requests/min.

Would your compute and database scale up to handle this quick increase in traffic? - Going from roughly 1.6 to 16 requests per second wouldn’t be an issue. You might get to 3 or 4 concurrent Lambdas.
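The traffic estimate above, written out so the assumptions are easy to tweak. The concurrency figure uses Little’s law (concurrent executions ≈ arrival rate x average duration). The 200ms average API Lambda duration is my assumption, not a measurement.

```typescript
// Inputs are the assumptions stated above.
const bookingsPerWeek = 8000;
const ownSiteShare = 0.25; // 25% of bookings on our own site
const conversion = 0.03;   // 3% of visitors book
const minutesPerWeek = 7 * 24 * 60;

const visitorsPerMin = (bookingsPerWeek * ownSiteShare) / conversion / minutesPerWeek; // ≈ 6.6
const requestsPerMin = visitorsPerMin * 3 /* pages per minute */ * 5 /* minutes browsing */; // ≈ 99
const baselineRps = requestsPerMin / 60; // ≈ 1.65
const spikeRps = baselineRps * 10;       // ≈ 16.5

// Little's law, with an assumed 200 ms average API Lambda duration.
const avgDurationSeconds = 0.2;
const concurrentLambdas = spikeRps * avgDurationSeconds; // ≈ 3.3

console.log(visitorsPerMin.toFixed(1), requestsPerMin.toFixed(0), spikeRps.toFixed(1), concurrentLambdas.toFixed(1));
```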

Might you hit some service limits? No.

If a developer added a recursive bug to the code that caused memory usage to spike, how would your application handle it? The lambda that contains that code would run out of memory and crash. This would make that function useless, but all of the other functions would work as normal. The joy of isolated functions.

What would happen if your database was corrupted or accidentally deleted? - The tables are all configured with Point-In-Time recovery. I would also ensure that tables are configured with ‘retain’ as their deletion policy.

Performance:

Might anything in your application cause user requests to fail to meet latency requirements? - No, simple APIs should return sub 1s response times with ease.

How do you configure and optimise your compute resources? (EC2 instance type / Lambda memory) - Using Lambda instead of ECS/EC2 means that we don’t need to monitor CPU/RAM usage and autoscale, as that’s done for us. I would default to 1GB of Lambda memory and then use AWS Lambda Power Tuning to optimise further. I would have alarms on API Lambdas for response times over 2s. If any trigger that alarm, we can re-run the power tuning or increase memory until an acceptable response time is reached, though this is very unlikely. For image resizing, memory would be tuned for a balance of cost and performance, since the resized images don’t need to be available instantly.

What should the team be monitoring to ensure optimal performance? - API response time, dead letter queue length for failed events, any failed payments.

Cost Optimisation:

What is the rough cost to run this application?

I created a spreadsheet to roughly estimate the costs at full capacity. I got halfway through and realised that CloudFront and Cognito were 10-100x the cost of the compute and database. Given this is an estimate, it wasn’t worth calculating every single Lambda and API endpoint.

Total cost =~ $2238 / month. That might seem like a lot and you might jump to change your CDN or swap Cognito for some other auth, but consider the cost relative to revenue.

At this capacity we’re handling 8000 properties @ $50/month = $400,000 / month. This makes our infra costs 0.5595% of our revenue, or a 99.4% profit margin (not accounting for other expenses). Why spend thousands of $ to save $1k/month when you could increase sales by 1% and increase revenue by $4k/month?

What is the most expensive component of your application? - CloudFront is one to watch, as it often isn’t accounted for. Cognito is the other, as every person who books through our hosted pages needs a Cognito user.

What designs/patterns have been implemented to optimise costs? - Use of Serverless, resizing and compressing images to reduce CloudFront costs.

Sustainability:

How does your architecture make the most of user usage patterns to improve sustainability? - Use of Serverless increases utilisation on the hardware, reducing total energy and material usage.

Project specific questions / things to watch out for

How accurate is your scheduling method? If a visitor gets there but still hasn’t been sent the key lock code then they’ll be very annoyed. (DynamoDB TTL method for scheduling is only guaranteed to 48 hours).

- Using EventBridge Scheduler means 60s accuracy. That is more than enough for this use case.

What if the email to the cleaning crew fails to send? Have you overwritten the current code so no-one can unlock the key box? - No. We keep the four most recent codes, so a code isn’t deleted until it has changed at least three more times.

If you’ve used DynamoDB, what is your schema so that you can effectively query the bookings by property or by owner? - Booking items carry two extra key pairs (pk2/sk2 and pk3/sk3) backed by global secondary indexes, so bookings can be queried by property, owner or visitor.

What ongoing maintenance tasks are there to maintain this app? Is this too much for the founder to handle on their own?

There should be minimal maintenance. Most of the ongoing work will be monitoring for errors and usage limits.

How are you ensuring that one of the rental owners can’t access the properties and data of another owner?

- Property owners have the businessId as an attribute on their Cognito user. This can be accessed inside any API Lambda that uses auth, and it is what we use to query properties and bookings, instead of an owner passing their businessId manually, which could be falsified. In the Lambda, a role is created with permissions limited to DynamoDB records with that business ID.

At the requested scale the application might be handling over 300,000 bookings a year (400 owners, 15 properties each, 50 bookings per property per year). How does your architecture handle this? Take note of limits in your email service provider and auth platform.

Cognito can handle this level of throughput. SES has a limit of 10 emails per second. With ~800 bookings a day, even with peaks we would not reach 10 emails/s. If the platform grew more, we could look at other email service providers who can handle higher traffic.

You are asked to add a feature. When there is a cancellation or a booking slot that has not been booked, the owner wants a button to send an email to all people who have previously stayed there. How complicated would it be to extend your current architecture to handle this?

This would be another endpoint. When a booking is made, we could also write to another table of visitor:property mappings. This could be queried to get all visitors who had stayed at the property before. We would need a queue system, as this could be hundreds of visitors and sending them all at once would hit the SES limit.

A hotel chain hears about the app. They have 80 hotels, each with around 400 rooms. The hotel chain needs finer access control. They need three new roles: chain manager, hotel manager and hotel employee.

How complicated would it be to extend your current architecture to handle this?

For the most part, the architecture should not need to change much. There would need to be some considerations for:

- Getting all ‘properties’ (think rooms) for a given business would need to change, as it would return 32,000 rooms (80 hotels x 400 rooms). This would require a change to both the management app and the visitor app.

Access patterns would need to change as well, with extra roles. This would require a change to the

I might suggest a completely dedicated deployment of the application for this business, adding to their data isolation.

Are there any known limitations in your current architecture that could become an issue with increased scale?

Email limits would need to be calculated to see if a new email provider is needed.

So that’s my architecture. Hopefully it was useful and you can compare the design decisions to yours.

I really want to remind you that although I’m a Serverless Obsessive, I am not perfect. There could definitely be bits of this architecture that could be done better or even errors in it. If you designed part of this challenge differently then compare each of our designs.

What pros and cons do each have?

Will one be cheaper/faster/simpler now but harder to extend later on?

There are no perfect answers.

And if you think I have made a mistake, please let me know. If there are things I can learn from other people’s architecture then even better!

Remember, if you want to submit your designs, there is a GitHub repo where you can push your designs and have discussions in the PR.

Fork the main repo (https://github.com/SamWSoftware/ServerlessArchitectureChallenge)

Clone your repo locally

Add a folder at /{Challenge Number}/submissions/{Your Name}

Put whatever you want into that folder. Ideally an architecture diagram (png and/or draw.io would be great) and some notes on how the architecture works and your process to get there.

Push your changes

Create a PR into the main repo

If you want to view and discuss other people’s submissions then check out the open PRs.

Finally, I want to say that if you’ve made it this far, thank you so much. I never expected so many people to be excited about designing serverless architecture. We’re at 245 subscribers already and it’s only 3 weeks old!

If you have any feedback on the challenge, the self-review questions, my design or have an idea for a future challenge, please let me know. I look forward to getting to chat with you!

Happy New Year!

Sam